The Art of Connection - How Threat Actors Exploit Professional Networks

The Coalition of Cyber Investigators discuss how organised crime groups are weaponising LinkedIn as a way of stealing personal information and credentialising sophisticated frauds such as boiler room investment scams.

Paul Wright & Neal Ysart

8/18/2025 | 18 min read


Disclaimer and Educational Context

This article is intended for general educational and informational use only and does not constitute legal or professional advice. The analysis presented here is not a report of evidence but a descriptive summary of observed patterns and procedures. While every effort has been made to ensure accuracy, readers are advised to consult qualified professionals on individual security matters. Note that some of the URLs and profiles referenced may no longer work, as threat actors habitually dismantle compromised accounts after use.

INTRODUCTION: THE PROFESSIONAL NETWORK UNDER SIEGE

Professional networking platforms, especially LinkedIn, are increasingly attractive targets for cybercriminals aiming to steal personally identifiable information (PII) or execute sophisticated frauds such as investment scams. What was once regarded as a safe space for personal and professional development and networking is now a hunting ground for attackers who exploit the trust within professional communities.

The Coalition of Cyber Investigators believes that LinkedIn, as the world’s largest professional networking platform, holds a unique position of trust and influence alongside a clear duty of care to protect its users from the growing epidemic of fake and cloned accounts. These fraudulent profiles not only undermine the integrity of professional interactions but also expose individuals and organisations to significant risks, including social engineering, data theft, and reputational harm.

The growth of fake LinkedIn profiles offers more than simple impersonation – it enables a structured form of social engineering that uses professional reputation to extract sensitive data from unsuspecting users. These fraudulent trends have far-reaching consequences, extending beyond individual privacy to impinge on corporate security and the integrity of professional networks.

Kevin Mitnick, the author of one of the most iconic books on social engineering, “The Art of Deception,” points out that criminals “often use authority or rank in corporate hierarchy as a weapon in their attacks on businesses.” It appears that LinkedIn has emerged as the perfect platform to exploit this vulnerability - professional networking can help credentialise a bogus persona.

This article spotlights the ease with which organised criminals can exploit LinkedIn and explains how to identify and investigate fake profiles using basic open-source intelligence (OSINT) and investigative techniques.

DEFINING FAKE LINKEDIN PROFILES

Fake LinkedIn profiles span a broad spectrum, from entirely fictitious personas to real individuals with grossly exaggerated credentials. These profiles often showcase fabricated work experience, education, and endorsements, all aimed at creating a misleading impression of professional expertise.

The motives behind such fraudulent accounts are varied and increasingly sophisticated. They range from phishing schemes designed to obtain corporate login details to extensive data mining efforts that expand marketing databases. These efforts often support identity theft operations based on hijacked personal information, with more advanced actors conducting corporate espionage through careful infiltration of professional networks.

Notably, The Coalition of Cyber Investigators has observed a significant rise in bogus LinkedIn accounts being used to credentialise boiler room investment fraud operations, making fictitious brokers and investment firms appear credible. Worst of all, however, has been the growth of advanced social engineering attacks that exploit the inherent trust in professional relationships.

FIRST STEP: PROFILE ANALYSIS

Our investigation into suspicious LinkedIn accounts uncovered a clear pattern of fraud by systematically analysing several key indicators. The most obvious red flag was the consistently low number of connections on these accounts (see Screenshots 005 and 008), which indicated that they had not yet built the typical professional networks of genuine users.

Behavioural analysis uncovered striking similarities in the accounts' activity, particularly their reposting. The accounts exhibited nearly identical sharing patterns, suggesting automated or orchestrated control rather than genuine professional participation. The same pattern extended to profile content: job categories and positions were duplicated verbatim across accounts, without any of the natural variation found in real professional careers.

The lack of authenticity became even more apparent when we examined the profiles' professional backgrounds. Experience sections with identical or eerily similar employment histories (see Screenshots 015 and 016) point to template-based profile building. Academic backgrounds were duplicated in the same way: several profiles listed the same institutions with identical wording, pointing to copy-and-paste construction rather than authentic records of educational achievement.

Skills sections offered additional evidence, with identical entries appearing on several profiles without the personalisation typical of real-life professional development. Likewise, the interests listed were uniformly suspicious, apparently taken from a pre-prepared list rather than reflecting genuine professional interests or influences.

Perhaps most revealing was the lack of social proof elements typical in genuine professional profiles. None of the suspected profiles were endorsed by colleagues or managers, nor had they endorsed others. Furthermore, the endorsement feature for skills, which usually fosters authentic validation among relevant professionals, was disabled on these profiles.

This pattern of similarities between many data points and the absence of professional networking characteristics offered strong evidence that threat actors had artificially created these accounts.
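For analysts reviewing many profiles, the indicators above can be recorded as a consistent checklist. The Python sketch below is one minimal way to do that, assuming the analyst fills in each field by hand from a manual review. The field names and the 50-connection threshold are illustrative assumptions, not data LinkedIn exposes, and a flag is a prompt for further verification rather than a finding.

```python
from dataclasses import dataclass


# Hypothetical record completed by an analyst after manually reviewing a profile.
# Field names and the threshold are illustrative assumptions, not LinkedIn data fields.
@dataclass
class ProfileObservation:
    name: str
    connections: int
    endorsements_given: int
    endorsements_received: int
    skill_endorsements_enabled: bool
    sections_copied: int  # sections found verbatim on other suspect profiles


LOW_CONNECTIONS = 50  # assumed cut-off; calibrate against known-genuine profiles


def red_flags(p: ProfileObservation) -> list[str]:
    """Return the profile-analysis indicators this profile exhibits."""
    flags = []
    if p.connections < LOW_CONNECTIONS:
        flags.append("low connection count")
    if p.endorsements_given == 0 and p.endorsements_received == 0:
        flags.append("no endorsements given or received")
    if not p.skill_endorsements_enabled:
        flags.append("skill endorsements disabled")
    if p.sections_copied > 0:
        flags.append(f"{p.sections_copied} section(s) duplicated on other profiles")
    return flags


suspect = ProfileObservation("suspect-1", 14, 0, 0, False, 3)
control = ProfileObservation("control-1", 480, 22, 35, True, 0)
print(red_flags(suspect))  # all four indicators
print(red_flags(control))  # []
```

Counting flags, rather than scoring them, keeps the reasoning transparent: each flag maps back to an indicator described above.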

THE EVOLVING THREAT LANDSCAPE

Recent research has uncovered alarming trends in creating and using fake profiles. Sophisticated threat actors now employ advanced techniques to craft authentic-looking professional profiles that can deceive even security-aware users. The information gathered through these methods is often traded on underground markets, putting countless individuals at risk of identity theft and financial scams such as boiler room investment fraud.

These attacks have progressed from primitive catfishing schemes to sophisticated, multi-level assaults that merge old-school social engineering with new-school data collection methods. LinkedIn's professional environment increases the effectiveness of attacks, since people more readily trust those who appear to be coworkers and business contacts.

FIVE COMMON THREAT ACTOR METHODOLOGIES

In this section, we examine five distinct threat actor methodologies, each illustrated with real LinkedIn profiles that have been - and in some cases, may still be - actively used to pursue criminal objectives.

Some readers may even find that they are connected to these deceptive profiles, highlighting just how pervasive and convincing this type of threat can be within professional networks.

The Coalition of Cyber Investigators recommends that readers double-check their connections against any profiles highlighted as fake.

METHODOLOGY 1: MASS PROFILE CLONING

One of the most prevalent techniques involves creating multiple profiles with identical or nearly identical content. This approach allows threat actors to cast a wide net while maintaining operational efficiency.

Case Study: The Deloitte Recruitment Scheme

Analysis of suspicious profiles revealed a coordinated campaign targeting the Forensic Tech, Intelligence (OSINT), and Investigations LinkedIn group. Multiple profiles claimed employment at Deloitte, all with the following identical job description:

"Recruitment and Talent Development | Performance Management | Compensation and Benefits | Employee Relationship Management | Legal Compliance."

Investigation revealed several profiles using this exact phrase, including:

A bogus profile in the name of Angelica Alexandre (profile no longer live)

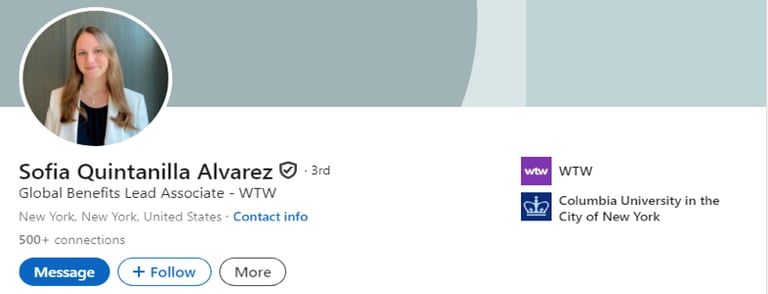

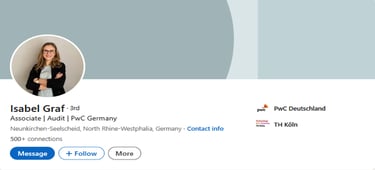

However, reverse image analysis of Angelica Alexandre's profile picture quickly identified the genuine owner of the cloned image, as can be seen in Screenshot 001 below (this profile was still live on 03/08/2025).

A bogus profile in the name of Rosemarie Vetter (profile no longer live)

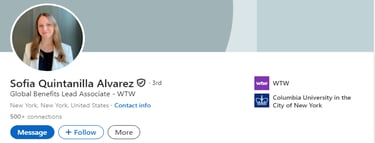

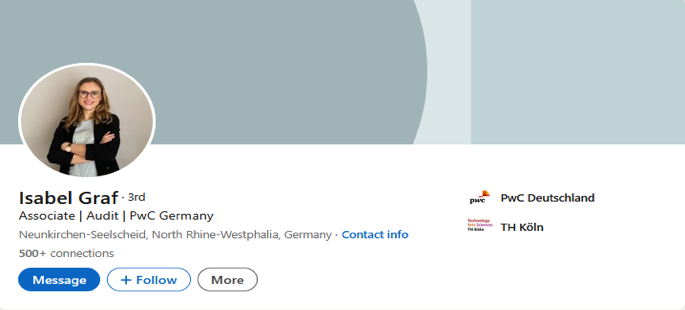

However, reverse image analysis of Rosemarie Vetter's profile picture also quickly identified the genuine owner of the cloned image, as can be seen in Screenshot 002 below (this profile was still live on 03/08/2025).

Additional fake profiles were also identified in the names of:

Teri A. (profile no longer live)

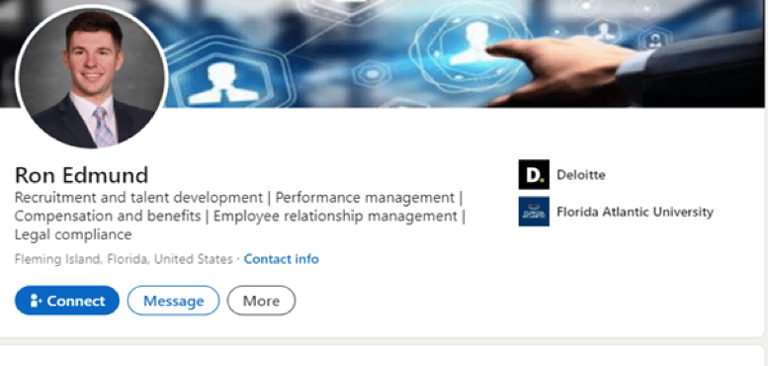

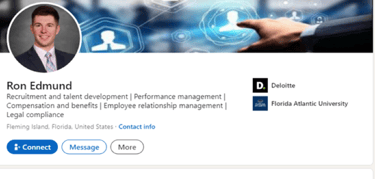

Ron Edmund (profile no longer live), and

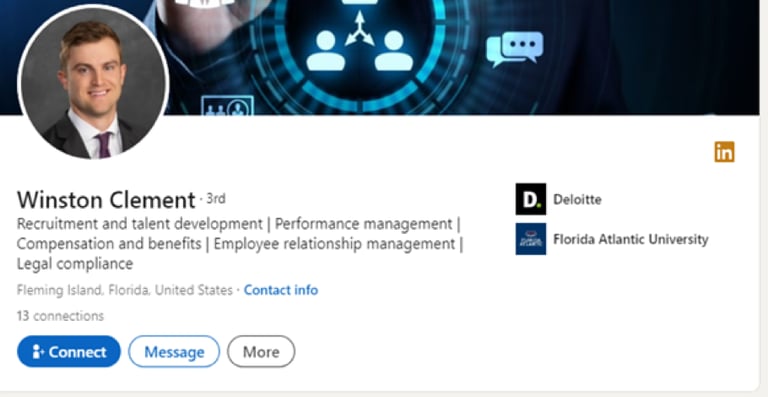

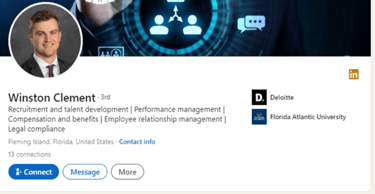

Winston Clement (profile no longer live)

Screenshot 003 below shows the bogus profile of Ron Edmund, complete with the fake job description.

Screenshot 001: Genuine profile identified by reverse image analysis

Screenshot 002: Genuine profile identified by reverse image analysis

Screenshot 003: Fake profile of Ron Edmund

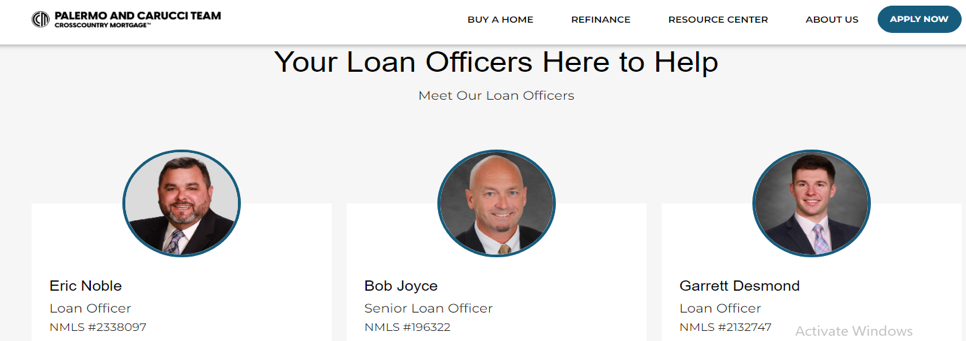

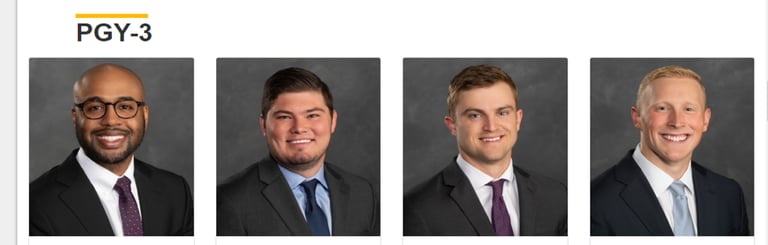

Reverse image analysis quickly identified the genuine owner of the image cloned for Ron Edmund's profile, as can be seen in Screenshot 004 below.

Screenshot 004: Genuine owner of the profile picture (live on 03/08/2025)

The fake Winston Clement profile in Screenshot 005 below also contained the same standardised job description and a cloned image from a genuine profile, which was identified using reverse image analysis and can be seen in Screenshot 006.

Screenshot 005: Fake profile of Winston Clement (no longer live on 03/08/2025)

Screenshot 006: https://orthopaedics.vcu.edu/education/residency-program/current-residents/ (live on 03/08/2025) showing the genuine owner of the fake profile picture

Our analysis revealed a troubling pattern of similarities among these fraudulent profiles.

Each account showed identical job descriptions and experience histories, all claiming the same educational background from the University of Montana, with precisely matching wording.

The skill listings and interest sections were nearly indistinguishable between profiles, while connection counts remained suspiciously low across all accounts.

Perhaps most notably, there was an absence of recommendations or endorsements on any profile, coupled with eerily similar repost activity patterns that indicated coordinated management.
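Identical job descriptions are easy to spot by eye across five profiles but not across fifty. A minimal Python sketch, assuming the analyst has already copied each profile's job description into a dictionary, normalises case, punctuation and spacing and then groups profiles whose text is identical. The profile keys are hypothetical placeholders; the sample text is the Deloitte job description quoted above.

```python
import re
from collections import defaultdict


def normalise(text: str) -> str:
    """Lower-case, turn separators and punctuation into spaces, then collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def group_by_template(descriptions: dict[str, str]) -> list[list[str]]:
    """Return groups of two or more profiles whose normalised text is identical."""
    groups = defaultdict(list)
    for profile, description in descriptions.items():
        groups[normalise(description)].append(profile)
    return [sorted(members) for members in groups.values() if len(members) > 1]


TEMPLATE = ("Recruitment and Talent Development | Performance Management | "
            "Compensation and Benefits | Employee Relationship Management | Legal Compliance.")

sample = {
    "profile_a": TEMPLATE,
    "profile_b": TEMPLATE.upper(),              # same text, different case
    "profile_c": TEMPLATE.replace(" | ", "|"),  # same text, different spacing
    "profile_d": "Audit manager, financial services",
}
print(group_by_template(sample))  # [['profile_a', 'profile_b', 'profile_c']]
```

Normalising first means trivial edits, such as changed capitalisation or spacing, do not hide a shared template.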

METHODOLOGY 2: HIJACKED CONVERSATION INSERTION

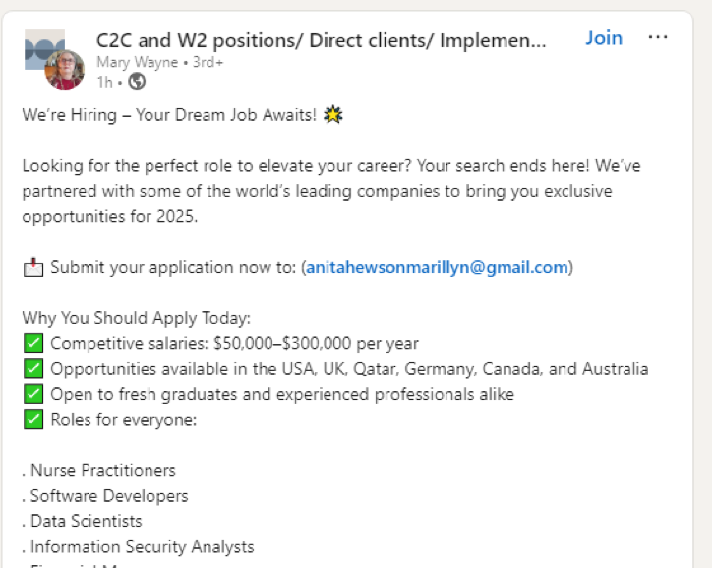

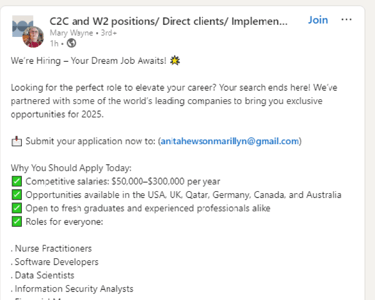

This sophisticated technique involves genuine or recently created profiles inserting fraudulent job opportunities into LinkedIn conversation threads.

In this exploit, threat actors monitor active discussions and interject comments with compelling job offers, designed to entice victims to respond, with the ultimate aim of extracting personal information from them.

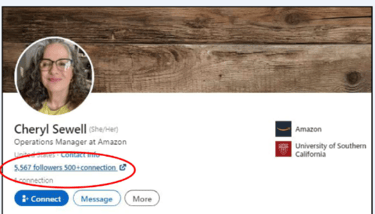

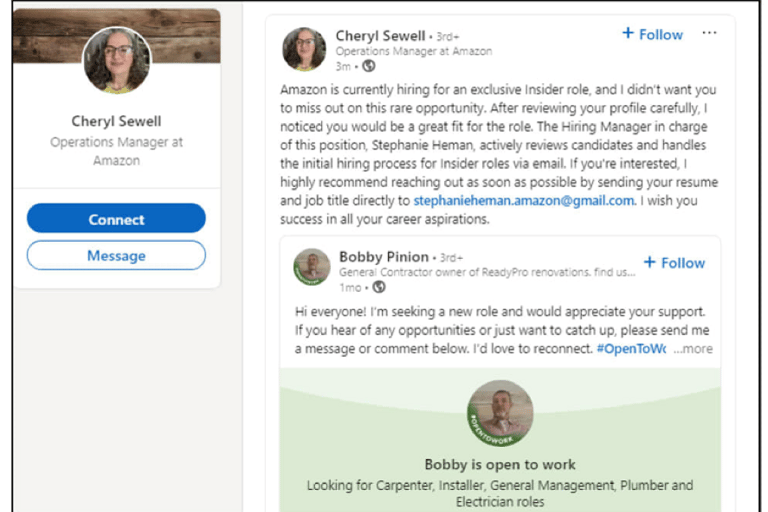

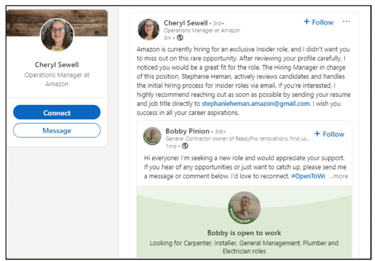

Case Study: The Amazon Insider Role Scam

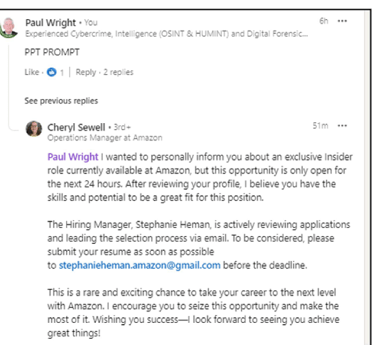

Fake profiles would comment on unrelated posts with messages such as:

“[Victim's Name], I wanted to inform you about an exclusive Insider role available at Amazon. This opportunity is only open for the next 24 hours… To be considered, please submit your resume as soon as possible to stephanieheman.amazon@gmail.com before the deadline.”

An example of this can be seen in Screenshots 007 and 010 below.

Screenshot 007 – Amazon insider role scam

This sophisticated approach exploits multiple psychological vulnerabilities simultaneously. The artificial time constraints generate a sense of urgency that sidesteps rational decision-making, while the promise of exclusivity indicates that the target has been specially chosen for this opportunity.

The authority implied by mentioning major corporations like Amazon adds credibility to the deception, and the placement within relevant professional discussions leverages the trust users naturally have in their professional network conversations.

Additionally, bogus Gmail accounts such as stephanieheman.amazon@gmail.com are another common denominator, both in this scam and in others.
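Contact addresses embedded in comments can be triaged automatically. The sketch below is a minimal illustration, assuming the comment text has been captured as plain text: it extracts email addresses, flags free webmail domains and checks whether a brand name appears in the part before the @, as in the Amazon example above. The webmail list is a small, non-exhaustive assumption, and a webmail address is only an indicator, since genuine people do use Gmail.

```python
import re

# Assumption: a small, non-exhaustive list of free webmail domains.
FREE_WEBMAIL = {"gmail.com", "googlemail.com", "outlook.com", "hotmail.com", "yahoo.com"}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def flag_emails(text: str, brands: list[str]) -> list[tuple[str, list[str]]]:
    """Extract email addresses and list the reasons each one looks suspicious."""
    results = []
    for address in EMAIL_RE.findall(text):
        local, domain = address.lower().rsplit("@", 1)
        reasons = []
        if domain in FREE_WEBMAIL:
            reasons.append("free webmail domain")
            # A company name inside a personal webmail address imitates a corporate mailbox.
            named = [b for b in brands if b.lower() in local]
            if named:
                reasons.append("brand in local part: " + ", ".join(named))
        results.append((address, reasons))
    return results


message = ("[Victim's Name], I wanted to inform you about an exclusive Insider role "
           "available at Amazon. To be considered, please submit your resume as soon as "
           "possible to stephanieheman.amazon@gmail.com before the deadline.")
print(flag_emails(message, ["Amazon"]))
```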

METHODOLOGY 3: PROFILE LINK MANIPULATION

With this technique, advanced threat actors manipulate LinkedIn’s URL structure to obscure their tracks and create false legitimacy signals. Analysis of suspicious profiles reveals systematic misuse of LinkedIn’s linking system.

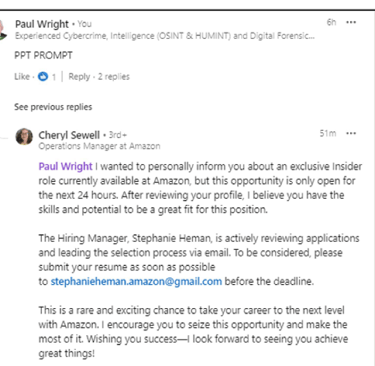

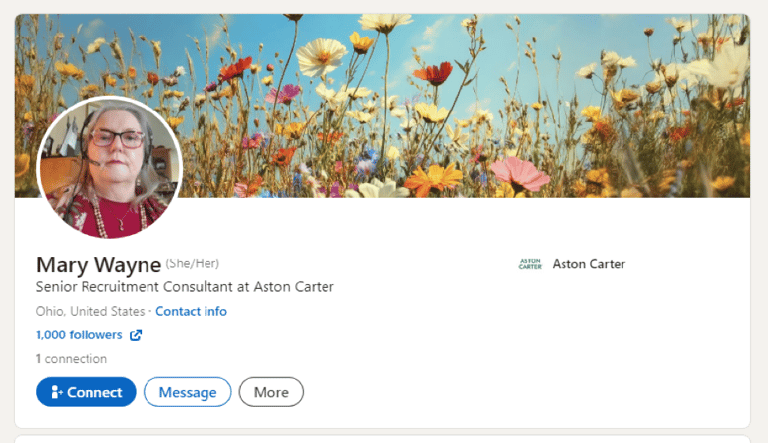

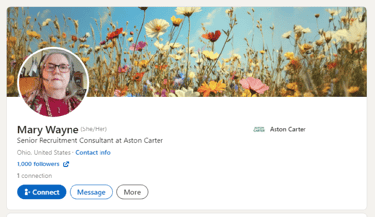

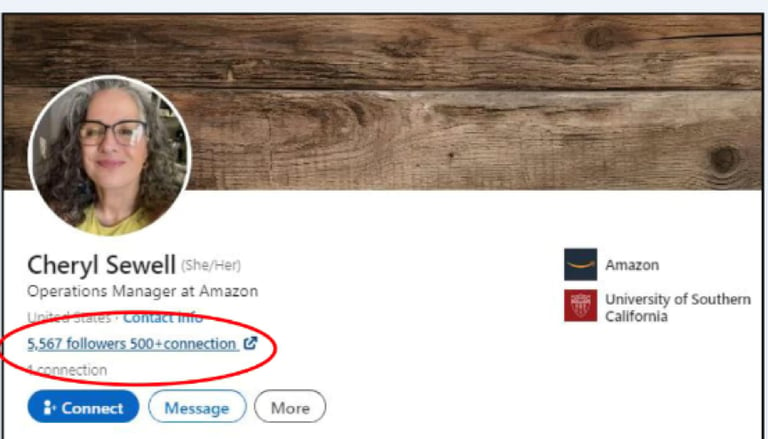

Case Study: Mary Wayne and Cheryl Sewell

Both accounts were participants in the Amazon job scam.

Screenshot 008 below shows the bogus profile of Mary Wayne, which is no longer live.

While the profile was live, it could be found at: https://www.linkedin.com/in/mary-wayne-a4269b362/.

The alphanumeric suffix at the end of the URL (a4269b362) is a unique identifier that LinkedIn appends to profiles whose public URL has not been customised. On its own it proves nothing, but here it is consistent with an auto-generated or recently created account.
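When many suspect URLs are collected, separating the name part from the suffix makes them easier to compare. The sketch below assumes public profile URLs take the form https://www.linkedin.com/in/<slug>/ and that un-customised slugs end in a hyphen followed by a short alphanumeric string containing at least one digit. Both are assumptions drawn from the examples in this article, not a documented LinkedIn format.

```python
import re
from urllib.parse import urlparse

# Assumed pattern: "<name part>-<6 to 12 lower-case letters/digits>".
SUFFIX_RE = re.compile(r"(?P<name>.+?)-(?P<suffix>[0-9a-z]{6,12})")


def parse_profile_url(url: str) -> dict:
    """Split a /in/ profile URL into its name part and any default-style suffix."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2 or parts[0] != "in":
        raise ValueError(f"not a /in/ profile URL: {url}")
    slug = parts[1].lower()
    match = SUFFIX_RE.fullmatch(slug)
    # Require a digit so ordinary words such as "-consultant" are not mistaken for a suffix.
    if match and any(ch.isdigit() for ch in match["suffix"]):
        return {"slug": slug, "name_part": match["name"], "suffix": match["suffix"]}
    return {"slug": slug, "name_part": slug, "suffix": None}


for url in ("https://www.linkedin.com/in/mary-wayne-a4269b362/",
            "https://www.linkedin.com/in/john-m-jackson-a733a6362/"):
    print(parse_profile_url(url))
```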

Screenshot 009 shows the fake Amazon job advertisement posted by Mary Wayne.

Cross-analysis revealed connections between Mary Wayne’s profile and another fake profile promoting the Amazon job scam: Cheryl Sewell’s profile.

Both used Gmail-based collection schemes and shared identical messaging patterns. More concerning still was the discovery that the website link displayed next to the follower count on each profile had been manipulated to direct visitors to external domains (see Screenshots 008, 010, and 012).

In addition, Screenshot 010 shows Cheryl Sewell’s fake profile, and Screenshot 011 shows a copy of her fake Amazon job advert.

Screenshot 008: Fake profile of Mary Wayne https://www.linkedin.com/in/mary-wayne-a4269b362/ (profile no longer live)

Screenshot 009 – Fake job advert posted by Mary Wayne

Screenshot 010 – Fake profile of Cheryl Sewell

Screenshot 011 – Fake job advert posted by Cheryl Sewell
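A simple check can classify the website links shown on a profile as pointing to LinkedIn itself, to a URL shortener that needs resolving, or to an external domain. This is a minimal sketch: the shortener list is an illustrative assumption and the example hosts are hypothetical. It compares the parsed hostname rather than searching the raw string, so a lookalike such as linkedin.com.example.io is still treated as external.

```python
from urllib.parse import urlparse

LINKEDIN_DOMAIN = "linkedin.com"
SHORTENERS = {"lnkd.in", "bit.ly", "tinyurl.com"}  # illustrative, not exhaustive


def classify_link(link: str) -> str:
    """Return 'linkedin', 'shortened' or 'external' for a profile website link."""
    if "://" not in link:
        link = "https://" + link  # urlparse only finds a hostname when a scheme is present
    host = (urlparse(link).hostname or "").lower()
    if host == LINKEDIN_DOMAIN or host.endswith("." + LINKEDIN_DOMAIN):
        return "linkedin"
    if host in SHORTENERS:
        return "shortened"  # resolve the redirect before drawing conclusions
    return "external"


for link in ("https://www.linkedin.com/in/example/",
             "https://www.linkedin.com.example.io/careers",
             "lnkd.in/abc123"):
    print(link, "->", classify_link(link))
```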

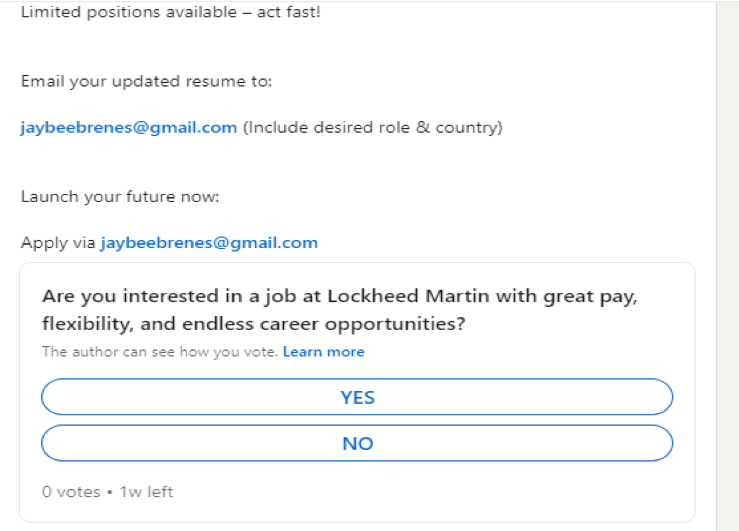

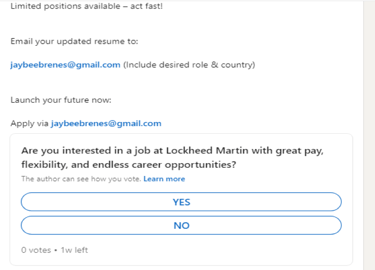

METHODOLOGY 4: INTERACTIVE CONTENT EXPLOITATION

In this exploit, threat actors take advantage of LinkedIn’s interactive features, particularly polls and surveys, to harvest personal information under the guise of professional engagement.

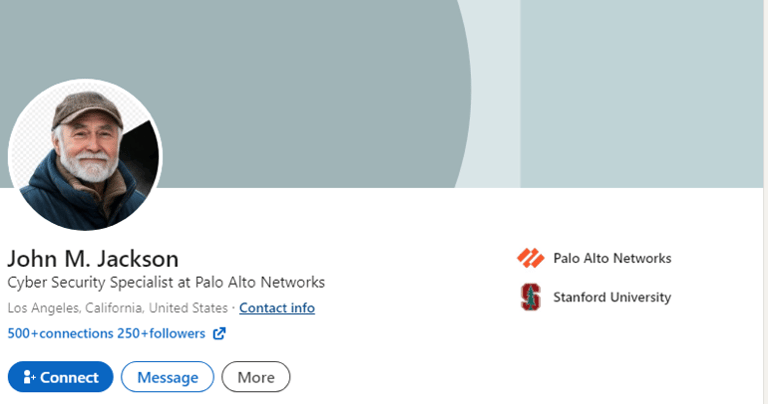

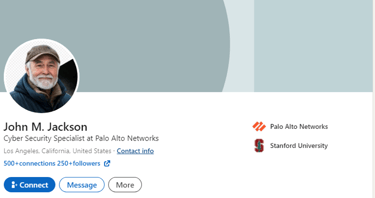

Case Study: John M Jackson’s Polling Scheme

Analysis of another fake profile in the name of John M Jackson (see Screenshot 012) revealed a systematic use of LinkedIn polls (see Screenshot 014) to gather PII. The profile showed several typical signs that immediately triggered suspicion among security experts, including:

The newly created account had LinkedIn’s characteristic auto-generated URL structure, indicating quick setup rather than natural account development

External communication was consistently routed through Gmail addresses instead of corporate email systems, for example, jaybeenbrenes@gmail.com

The polls were carefully designed to extract specific types of personal information

Despite active posting, the account exhibited unusually low engagement rates, suggesting fake activity or audience scepticism

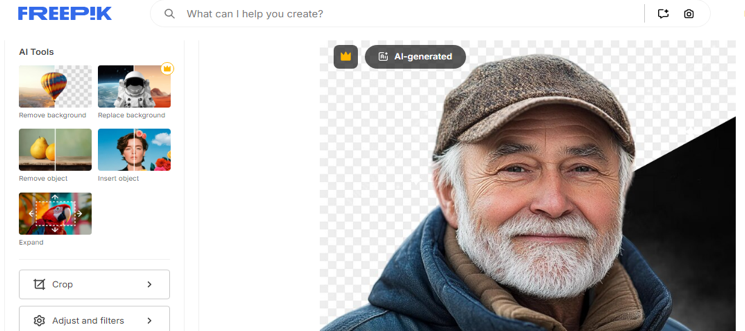

A reverse image search identified the profile image as originating from a well-known stock image website (see Screenshot 013)

Screenshot 012: Fake profile of John M. Jackson https://www.linkedin.com/in/john-m-jackson-a733a6362/ (no longer live)

Screenshot 013 - Genuine stock photo identified via reverse image search

Screenshot 014 – Bogus survey designed to credentialise fake posts and gather PII

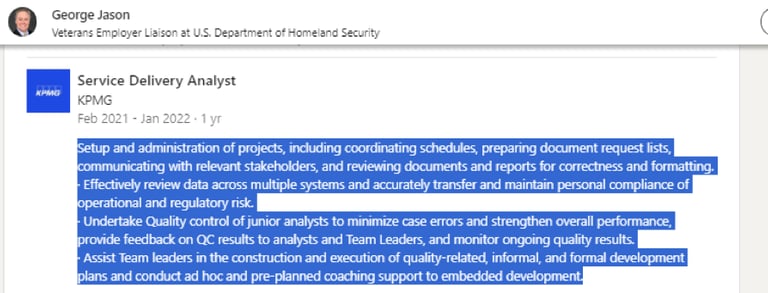

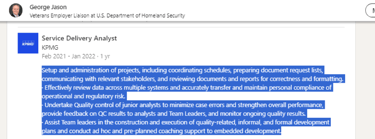

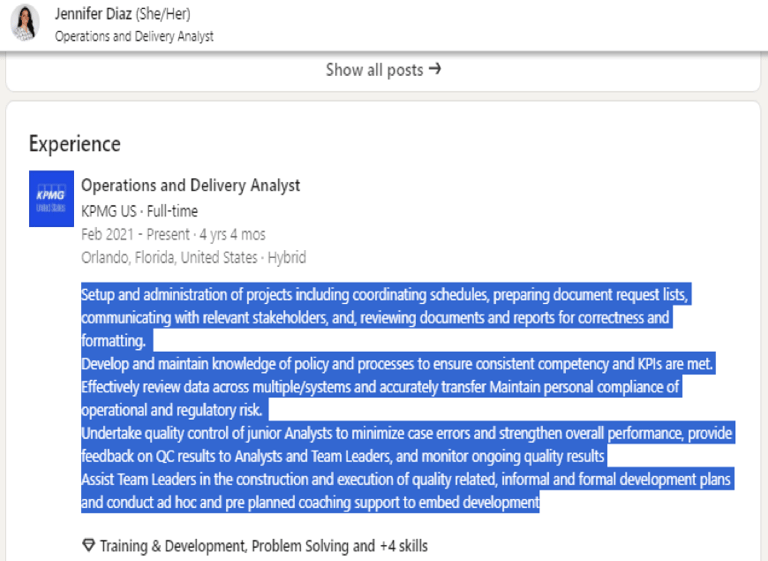

METHODOLOGY 5: CONTENT TEMPLATE RECYCLING

Our investigation revealed that threat actors are routinely recycling profile content across multiple fake accounts, creating networks of related fraudulent profiles.

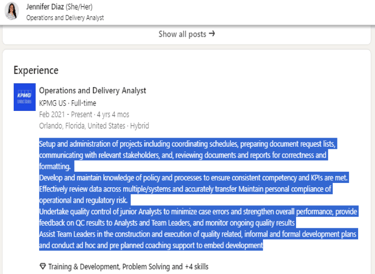

Case Study: George Jason and Jennifer Diaz Content Overlap

The analysis of the fake profile of George Jason (see Screenshot 015) revealed significant content overlap with that of another fake profile of Jennifer Diaz (see Screenshot 016), including identical “About” sections and professional descriptions.

This recycling approach allows threat actors to maintain multiple personas while minimising content creation efforts.

Screenshot 015 – Fake profile of George Jason

Screenshot 016: Fake profile of Jennifer Diaz https://www.linkedin.com/in/jennifer-diaz-a7687211b/ (still live 03/08/2025)
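Recycled "About" sections are not always copied perfectly, so exact matching can miss them. One common near-duplicate technique, shown here as a minimal sketch rather than the method used in this investigation, splits each text into overlapping three-word shingles and compares the sets with Jaccard similarity. The 0.6 threshold and the sample texts are illustrative assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences from the lower-cased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(texts: dict[str, str], threshold: float = 0.6) -> list[tuple[str, str, float]]:
    """Return every pair of profiles whose texts meet the similarity threshold."""
    sets = {name: shingles(text) for name, text in texts.items()}
    pairs = []
    for x, y in combinations(sorted(sets), 2):
        score = jaccard(sets[x], sets[y])
        if score >= threshold:
            pairs.append((x, y, round(score, 2)))
    return pairs


about = ("Experienced recruiter passionate about connecting talent "
         "with opportunity across global markets")
sample = {
    "profile_a": about,
    "profile_b": about + " and industries",  # lightly edited copy
    "profile_c": "Completely different biography text here",
}
print(near_duplicates(sample))  # [('profile_a', 'profile_b', 0.82)]
```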

REVERSE IMAGE ANALYSIS – A KEY OSINT TECHNIQUE FOR PROFILE VERIFICATION

Reverse image analysis is one of the most impactful techniques in OSINT-led LinkedIn profile verification. Used correctly, it's a force multiplier, but it should be deployed alongside a range of other techniques. Combining it with further procedures, including metadata checks, Google Dorking (advanced search operators), cross-platform identity correlation, Geolocation/Chronolocation, and network mapping, helps enable multi-source verification and can significantly improve confidence in any conclusions that can be drawn. Temporal analysis is also an important procedure to consider in conjunction with reverse image queries, as LinkedIn's filename structure for uploaded images contains UNIX timestamps that can reveal account creation patterns and help investigators map coordination between multiple profiles.
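To illustrate the temporal analysis described above, the sketch below assumes that the final path segment of a LinkedIn profile-image URL is a 13-digit millisecond UNIX timestamp (for example .../0/1700000000000?e=...). That format is an assumption based on observed URLs rather than documented behaviour, so it should be checked against your own samples. Ordering several suspect profiles by photo upload time can suggest whether they were set up in a single batch; the sample URLs are hypothetical.

```python
import re
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import urlparse

MS_TIMESTAMP = re.compile(r"\d{13}")  # assumed: milliseconds since 1970-01-01 UTC


def upload_time(media_url: str) -> Optional[datetime]:
    """Return the UTC time encoded in the last path segment, or None if absent."""
    last_segment = urlparse(media_url).path.rstrip("/").split("/")[-1]
    if not MS_TIMESTAMP.fullmatch(last_segment):
        return None
    return datetime.fromtimestamp(int(last_segment) / 1000, tz=timezone.utc)


def timeline(images: dict[str, str]) -> list[tuple[str, datetime]]:
    """Order profiles by the decoded upload time of their profile photo."""
    dated = [(name, upload_time(url)) for name, url in images.items()]
    return sorted(((n, t) for n, t in dated if t is not None), key=lambda item: item[1])


BASE = ("https://media.licdn.com/dms/image/v2/EXAMPLE/profile-displayphoto-shrink_400_400/"
        "profile-displayphoto-shrink_400_400/0/")
sample = {
    "profile_a": BASE + "1700000000000?e=0&v=beta&t=x",
    "profile_b": BASE + "1699999400000?e=0&v=beta&t=x",
}
for name, when in timeline(sample):
    print(name, when.isoformat())
```

Photos uploaded minutes apart across several "unrelated" profiles would be worth recording alongside the other coordination indicators.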

Multi-method workflows and when to apply each step will be covered in a forthcoming detailed guidance document to be published by The Coalition of Cyber Investigators. For now, this article will focus on reverse image searching only, with the caveat that verification through other sources is non-negotiable.

Reverse Image Analysis

As can be seen from our analysis of the methodologies above, reverse image searching is one of the most effective methods for identifying fake profiles. Professional headshots in fraudulent profiles often originate from stock photo websites or corporate directories or are cloned from genuine social media accounts.

Technical Approach:

A basic workflow to help identify fake profiles could include the following steps:

Submit profile images to reverse image search engines (see Screenshots 001, 002, 004, 005 and 012)

Cross-reference results with stock photo databases

Analyse image metadata for creation timestamps (see Screenshot 008)

Check for AI-generated content indicators

Analyse profile content, as detailed above
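Reverse image search engines do the heavy lifting in the first step, but once a candidate original has been found, a perceptual hash can help confirm that two images are the same picture despite resizing or brightness changes. The sketch below implements a simplified average hash (aHash) in pure Python on an already-decoded greyscale pixel grid, so it runs without extra libraries; in practice an image library such as Pillow, together with a package such as imagehash, would handle decoding and resizing. The synthetic gradient images stand in for real photos.

```python
def average_hash(pixels: list[list[int]], size: int = 8) -> int:
    """Simplified aHash: point-sample a size x size grid (instead of area averaging)
    and set one bit per cell that is brighter than the grid's mean."""
    height, width = len(pixels), len(pixels[0])
    grid = [pixels[r * height // size][c * width // size]
            for r in range(size) for c in range(size)]
    mean = sum(grid) / len(grid)
    bits = 0
    for value in grid:
        bits = (bits << 1) | int(value > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; 0 means the hashes match exactly."""
    return bin(a ^ b).count("1")


# Synthetic 16 x 16 greyscale "photos" standing in for decoded images.
original = [[r * 16 + c for c in range(16)] for r in range(16)]
brighter = [[v + 20 for v in row] for row in original]   # same picture, brightness shifted
inverted = [[255 - v for v in row] for row in original]  # a genuinely different picture

print(hamming(average_hash(original), average_hash(brighter)))  # 0
print(hamming(average_hash(original), average_hash(inverted)))  # 64
```

A small Hamming distance supports, but does not prove, that two images share a source; the match should still be confirmed visually and against the attributable page where the original was found.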

Case Examples:

Ron Edmund’s fake profile image was traced to amrcaustin.com (see Screenshots 003 and 004)

Winston Clement’s fake profile photo was found on the VCU Orthopaedics Residency page (see Screenshots 005 and 006)

John M Jackson’s fake profile used an image from a professional stock image site (see Screenshots 013 and 014)

Cross-Platform Verification

To support multi-source verification and add greater confidence to reverse image analysis, cross-platform analysis techniques can help corroborate identity signals. Legitimate professionals usually maintain a public online presence beyond their LinkedIn profiles. Verification methods include thorough searches for mentions in authoritative and attributable sources such as industry publications and trade journals, official corporate directories and staff listings, conference participation records and speaking engagements, and activity on other professional platforms such as industry forums or academic networks. Triangulating these sources with the results of reverse image analysis builds a web of verification that fraudulent profiles find difficult to imitate convincingly.
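Searches for the kinds of sources listed above can be made repeatable with a small query builder. This sketch assumes a general web search engine that supports the common quoted-phrase, site:, -site: and filetype: operators; the name, employer and domain in the example are hypothetical.

```python
from typing import Optional


def verification_queries(name: str, employer: str,
                         employer_domain: Optional[str] = None) -> list[str]:
    """Build search queries that look for a claimed professional outside LinkedIn."""
    person = f'"{name}"'
    queries = [
        f'{person} "{employer}" -site:linkedin.com',  # mentions off LinkedIn
        f'{person} "{employer}" filetype:pdf',         # reports, agendas, papers
        f'{person} conference speaker',                # speaking engagements
    ]
    if employer_domain:
        queries.append(f"{person} site:{employer_domain}")  # staff pages on the employer's site
    return queries


for query in verification_queries("Jane Example", "Example Corp", "example.com"):
    print(query)
```

An empty result set is not proof of a fake, but a claimed senior professional with no footprint beyond LinkedIn warrants closer scrutiny.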

Network Analysis

Connection pattern analysis often reveals clear signs of fraudulent LinkedIn account management. Suspicious profiles frequently show unusual clusters of connections across unrelated industries, indicating random rather than natural professional relationship building. Overall connection counts tend to remain surprisingly low for individuals claiming senior professional positions, while their posted content attracts minimal meaningful engagement from their supposed network. Perhaps most telling is the persistent absence of recommendations or endorsements, key indicators of genuine professional relationships developed over time.
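One way to put a number on "clusters of connections across unrelated industries" is the Shannon entropy of the industries listed by a profile's connections. The sketch assumes the analyst has recorded each visible connection's industry as a string; a low-connection profile with unusually high entropy fits the random, harvested pattern described above. Entropy naturally rises with network size, so comparisons are only meaningful between profiles of similar size, and the sample lists are hypothetical.

```python
import math
from collections import Counter


def industry_profile(industries: list[str]) -> dict:
    """Summarise how widely a profile's connections are spread across industries."""
    counts = Counter(i.strip().lower() for i in industries if i.strip())
    n = sum(counts.values())
    # Shannon entropy in bits: 0 when every connection is in one industry.
    entropy = sum((c / n) * math.log2(n / c) for c in counts.values()) if n else 0.0
    return {"connections": n, "distinct_industries": len(counts),
            "entropy_bits": round(entropy, 3)}


focused = ["Legal Services"] * 6 + ["Financial Services"] * 2
scattered = ["Mining", "Fashion", "Aviation", "Dentistry",
             "Gaming", "Legal Services", "Agriculture", "Shipping"]
print(industry_profile(focused))
print(industry_profile(scattered))  # 8 connections, 8 industries, 3.0 bits
```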

RISK AND IMPACT OF ENGAGEMENT WITH FAKE PROFILES

Personal risks

When engaging with fake or impersonated profiles, individual users become vulnerable to privacy and security risks. These include threats to data security, such as sophisticated phishing attacks and malware that compromise personal devices and accounts.

PII gathered via fake profiles is typically the building block of identity theft, in which offenders use the stolen details to open accounts, apply for credit, or commit financial fraud in the victim's name.

Additionally, reputational damage is caused when real professionals are associated with fictitious profiles, potentially tarnishing their credibility among employers, clients, and colleagues.

Perhaps most immediately concerning are the economic losses caused by fraudulent job postings designed as advance fee fraud schemes, in which victims are induced to pay upfront fees for jobs that never exist.

Institutional risks

Organisations face even more complex and potentially disastrous consequences from sophisticated social engineering attacks against employees.

Corporate espionage is perhaps the worst threat: hostile profiles can methodically infiltrate internal systems through trusted business relationships, obtaining sensitive business intelligence and strategic planning information. This threat has come to wide public attention through multiple reports of North Korean operatives using the stolen identities and credentials of US citizens to land remote IT jobs at US technology and defence companies. In the same vein, adversaries can use social engineering to steal intellectual property, confidential research, development plans, and proprietary methods that give them a competitive advantage.

Brand reputation can also be severely damaged when organisations become associated with fraudulent activity, whether as impersonated victims or as targets of successful attacks that are made public. Organisations may also face serious compliance violations and regulatory penalties if data breaches result from social engineering attacks that bypass security measures by exploiting human vulnerabilities.

Defensive Strategies and Best Practices

Although the evidence above shows that fake accounts can be detected, expect threat actors to refine and improve their scams over time. Criminals evolve quickly and adapt to changing circumstances far faster than those on the right side of the law can react. It would, therefore, be complacent to assume they will always be so obvious, especially given the increasing use of technology, particularly AI, to make convincing fakes easier to create.

Individual LinkedIn users can apply several pragmatic verification methods to guard against fake profiles:

1. Before accepting a connection request, screen any mutual contacts and contact them directly to confirm that the requesting profile is legitimate.

2. Reverse search profile images to quickly identify stock or stolen images reused across multiple fake profiles.

3. Verify professional claims against third-party sources such as company directories, conference speaker lists, or trade publications, for an additional layer of validation.

4. Examine profile completeness and internal consistency, looking for discrepancies in work history, education, or skill claims that indicate fabrication.

5. Watch for suspicious message patterns, especially unsolicited career opportunities or requests for personal information, to detect social engineering attempts before they succeed.

Organisations should implement comprehensive countermeasures that address both the human and technical components of social engineering attacks. At a minimum, these should include:

1. Security awareness training which specifically targets social engineering threats, alerting employees to the sophisticated methods used in professional networking contexts and providing them with practical skills for detecting suspicious engagement.

2. Implementing defined verification procedures for releasing sensitive information, so that all employees follow the same process when contacted by strangers or by anyone claiming a professional connection with them.

3. Reviewing employees' LinkedIn activity for signs of targeting to help security teams discover emerging threats early, before they affect the organisation.

4. Developing comprehensive incident response guidelines for probable social engineering attacks to enable swift containment and investigation when threats are found, minimising possible damage and providing valuable intelligence to avert future attacks.

CONCLUSION

The industrial-scale creation of spoofed LinkedIn profiles marks a significant shift in cybercriminals' approach, from opportunistic fraud to professional-grade, organised social engineering campaigns.

Reported incidents and the examples in this article show increasingly sophisticated techniques that clearly weaponise LinkedIn’s core attributes - trust, opportunity, and career advancement - to bypass security controls by targeting people instead of perimeter defences.

The coordinated nature of these activities points to organised criminal campaigns instead of random events.

As these threats evolve, the importance of applying OSINT procedures, digital forensics and cybercrime investigation techniques to uncover and counteract artificial profiles cannot be overemphasised. The combination of technical analysis, behavioural assessment, and cross-platform verification provides a sound basis for maintaining trust in professional networks.

However, this is not just a user‑hygiene problem; it is a platform‑safety problem. As the custodian of the world’s largest professional network, LinkedIn has a duty of care to ensure its design choices do not enable abuse at scale.

The volume, persistence, and coordination of fake accounts indicate that LinkedIn’s current controls are inadequate and reactive. There is not enough friction at account creation, and there is virtually no identity validation process. Given that LinkedIn is a technology company, it is not unreasonable to expect it to deploy analytical solutions to identify fake and repetitive profiles and content.

At a minimum, potential measures for LinkedIn should include:

Stronger identity verification at account creation (especially for recruiters and those accessing premium features)

Tapered friction measures for new accounts and sudden connection bursts

Image‑provenance checks and stock/duplicate detection

Robust bot and cluster detection

A transparent reporting and feedback process with faster, measurable takedown service level agreements (SLAs)

Going forward, individuals and organisations must acknowledge that professional networking websites are legitimate attack vectors that demand the same level of security as any other digital asset. While these platforms are valuable for genuine networking, the inherent trust in professional relationships also introduces risks that sophisticated threat actors are increasingly willing and able to exploit.

Importantly, LinkedIn must fulfil its obligations by making the world’s largest professional networking platform significantly harder to exploit. Until it does, the integrity of professional networking - and the safety of the people and companies who depend on it - will remain needlessly at risk.

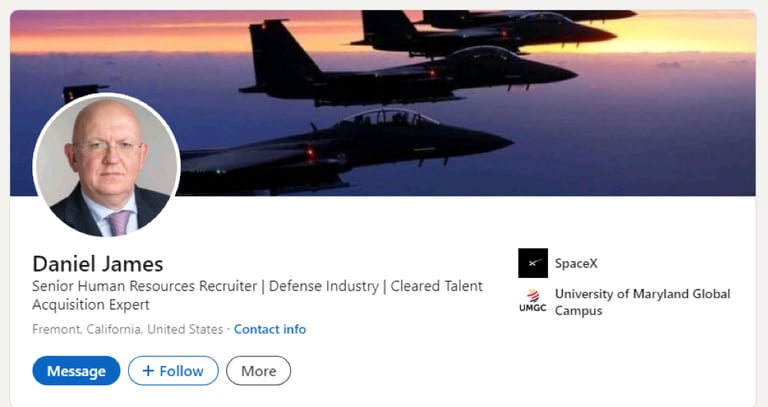

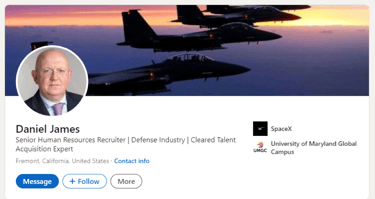

STOP PRESS

In the hours after this article was written, The Coalition of Cyber Investigators identified a new cluster of high‑profile SpaceX‑related fake accounts exhibiting the same tradecraft outlined in this article.

Follow the trail below:

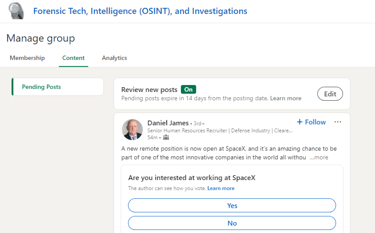

Screenshot 017 - Fake profile in the name Daniel James

STEP 2

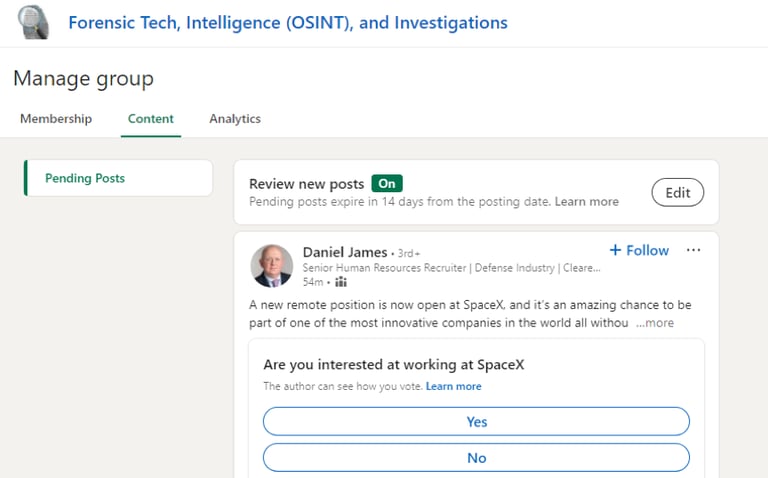

“Daniel James” attempted to post a fake SpaceX job vacancy in the Forensic Tech, Intelligence (OSINT), and Investigations LinkedIn group, as can be seen in Screenshot 018

Screenshot 018 – Attempted Job Post

STEP 3

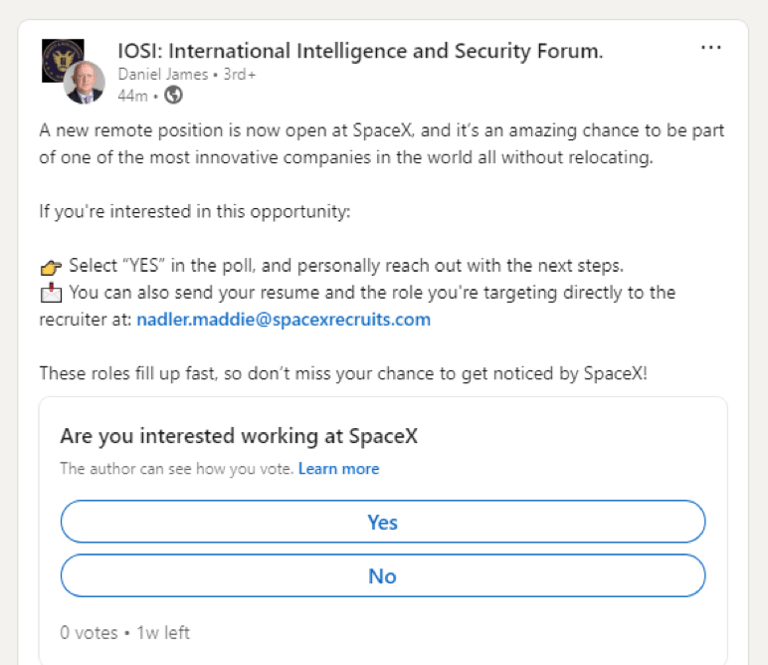

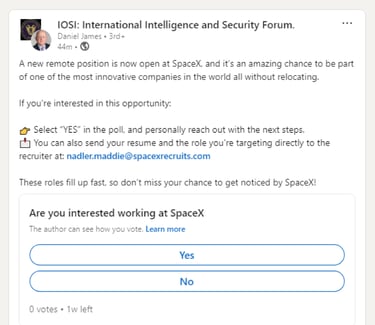

However, “Daniel James” was able to successfully post the same fake SpaceX vacancy in the IOSI: International Intelligence and Security Forum on LinkedIn, as can be seen in Screenshot 019

STEP 1

Meet the fake profile of “Daniel James” (https://www.linkedin.com/in/daniel-james-b249a8379/) in Screenshot 017 below:

Screenshot 019 – successful post of Fake SpaceX Job

STEP 4

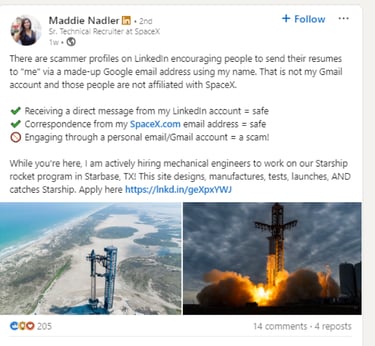

Screenshot 019 above shows a fake email address prefixed with the name of a real SpaceX recruiter, Maddie Nadler: nadler.maddie@spacexrecruits.com
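The spacexrecruits.com address illustrates a lookalike domain: it contains the brand name but is not the company's own domain. A minimal check could look like the sketch below, assuming the investigator has independently confirmed the organisation's official domains; spacex.com is used here as an assumption for illustration.

```python
def domain_verdict(address: str, brand: str, official_domains: set[str]) -> str:
    """Classify an email domain as 'official', 'lookalike' or 'unrelated'."""
    domain = address.lower().rsplit("@", 1)[-1]
    if any(domain == d or domain.endswith("." + d) for d in official_domains):
        return "official"
    # Ignore hyphens so variants such as space-x-careers.com are still caught.
    if brand.lower() in domain.replace("-", ""):
        return "lookalike"
    return "unrelated"


OFFICIAL = {"spacex.com"}  # assumption: confirm official domains independently
print(domain_verdict("nadler.maddie@spacexrecruits.com", "SpaceX", OFFICIAL))  # lookalike
```

A "lookalike" verdict is a strong prompt to verify through the company's official careers channels rather than replying to the address.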

STEP 5

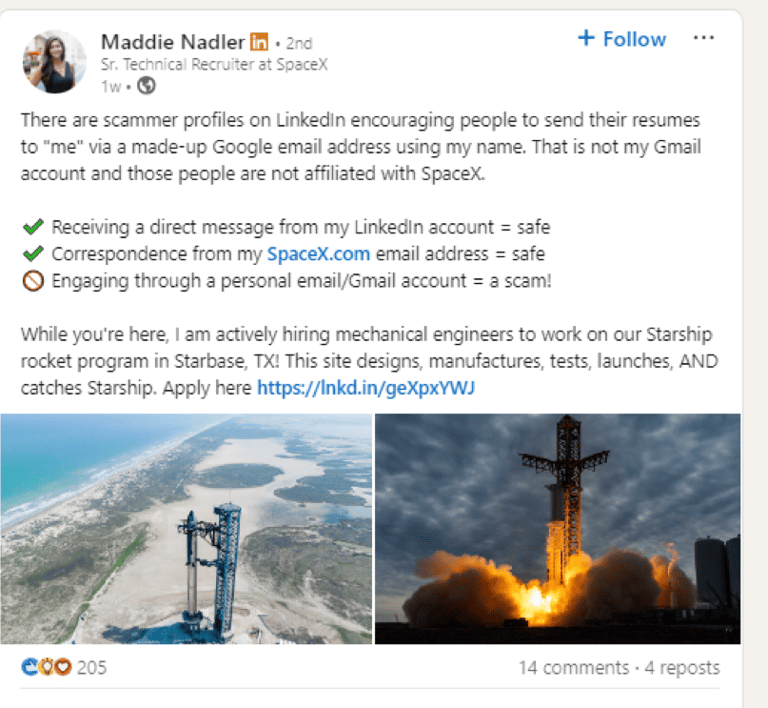

Commendably, this post was spotted by the genuine Maddie Nadler of SpaceX, who posted a message in response, which can be seen in Screenshot 020. Her post below contains sensible advice about red flags to watch out for in relation to this scam. It is significant that it was an individual user who highlighted this and not LinkedIn.

Screenshot 020 – Message from a Real SpaceX Recruiter

STEP 6

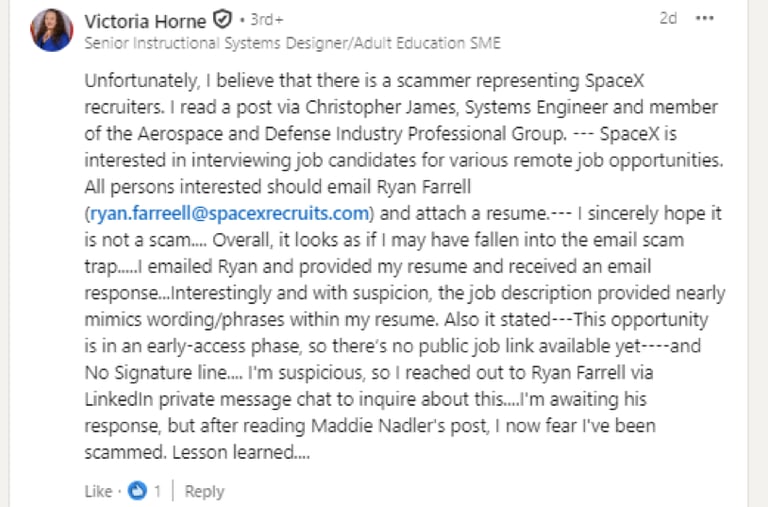

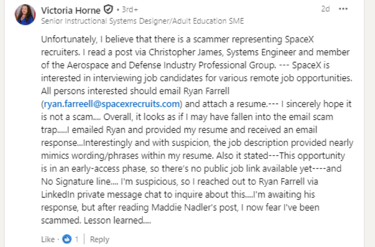

A comment was then posted on Maddie Nadler’s post above, highlighting another potential SpaceX scam job advert. The commenter was concerned that they had already fallen victim to this scam (see Screenshot 021).

Screenshot 021 – Comment from a SpaceX Job Scam Victim

STEP 7

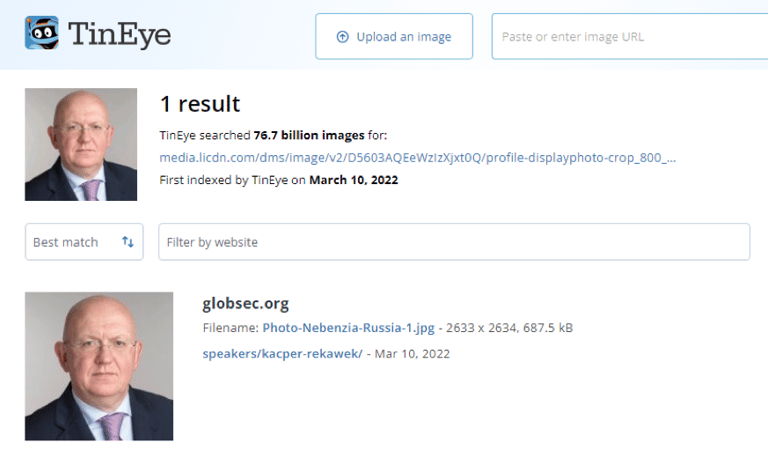

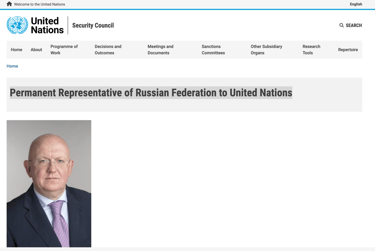

The reverse image search on Daniel James’s profile picture revealed that the image had been stolen from its real owner, Ambassador Nebenzia Vassily Alekseevich, Permanent Representative of the Russian Federation to the United Nations Security Council.

The reverse image search that very quickly identified this can be seen in Screenshot 022.

Screenshot 022 – Reverse Image Search of “Daniel James” fake profile picture

STEP 8

When looking for authoritative and attributable sources to verify findings, Screenshot 023 is as good as it gets.

Screenshot 023 – Member profile on the UN Security Council Website

We will release further details on this and other LinkedIn fake profile scams in forthcoming articles, so follow The Coalition of Cyber Investigators for future insights and guidance.

STOP PRESS END

Authored by: The Coalition of Cyber Investigators

Paul Wright (United Kingdom) & Neal Ysart (Philippines)

©2025 The Coalition of Cyber Investigators. All rights reserved.

The Coalition of Cyber Investigators is a collaboration between

Paul Wright (United Kingdom) - Experienced Cybercrime, Intelligence (OSINT & HUMINT) and Digital Forensics Investigator;

Neal Ysart (Philippines) - Elite Investigator & Strategic Risk Advisor, Ex-Big 4 Forensic Leader; and

Lajos Antal (Hungary) - Highly experienced expert in cyberforensics, investigations, and cybercrime.

The Coalition unites leading experts to deliver cutting-edge research, OSINT, Investigations, & Cybercrime Advisory Services worldwide.

Our co-founders, Paul Wright and Neal Ysart, offer over 80 years of combined professional experience. Their careers span law enforcement, cyber investigations, open source intelligence, risk management, and strategic risk advisory roles across multiple continents.

They have been instrumental in setting formative legal precedents and stated cases in cybercrime investigations and contributing to the development of globally accepted guidance and standards for handling digital evidence.

Their leadership and expertise form the foundation of the Coalition’s commitment to excellence and ethical practice.

Alongside them, Lajos Antal, a founding member of our Boiler Room Investment Fraud Practice, brings deep expertise in cybercrime investigations, digital forensics, and cyber response, further strengthening our team’s capabilities and reach.

The Coalition of Cyber Investigators, with decades of hands-on experience in cyber investigations and OSINT, is uniquely positioned to support organisations facing complex or high-risk investigations. Our team’s expertise is not just theoretical - it’s built on years of real-world investigations, a deep understanding of the dynamic nature of digital intelligence, and a commitment to the highest evidential standards.